Sorry, what was the question again?

And again. And again. And again...

The design of any high-quality clinical study starts with the clinical question it's intended to help answer.

So, what's the question?

This is a common refrain from people like me, whose job it is to help design studies. It’s basically a trope at this point. But as I've gained more experience helping to design studies, I've come to realize that this question is too open-ended for clinical research. It needs more structure. It needs some constraints on our boundless creativity. So now I ask my colleagues a slightly different question:

Is your clinical question about description, prediction, causality, or measurement?

So before we talk about your study, I would like you to please think about how your research question(s) can be stated in these terms.

Description

Most of us working in medical and health research want to fix problems and improve people's lives. But before we can fix a problem, we not only need to know it exists, we need to generate convincing evidence of its existence so that decision makers will help us do something about it. This is where description comes in. How many cases of influenza were there? What’s the mean birth weight? What’s the range of times it takes patients presenting to the ED to be admitted or discharged? Unfortunately these questions aren't likely to land you in a glossy “high impact” medical journal. But don't let that stop you from embracing “simple” descriptive research - these are often the most important, impactful questions that we as scientists can help answer.

However, despite their apparent simplicity, descriptive studies still require solid scientific methods. That includes carefully thinking about the population of interest, preferably in the context of downstream decision making - how might your findings actually be used? It means designing an appropriate way to sample from that population. And it means correctly analyzing your data and then interpreting the results in light of any uncertainty.
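As a small illustration of "interpreting the results in light of any uncertainty", here is a minimal sketch that reports a proportion together with a 95% confidence interval. It uses the Wilson score interval, which is one reasonable choice among several. The counts (30 influenza cases among 400 sampled patients) are made up for the example, and the sketch assumes a simple random sample from the population of interest.

```python
import math


def wilson_interval(events, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = events / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = (
        z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    )
    return centre - half_width, centre + half_width


# Hypothetical data: 30 cases among 400 sampled patients.
cases, sampled = 30, 400
low, high = wilson_interval(cases, sampled)
print(f"Proportion: {cases / sampled:.3f} (95% CI {low:.3f} to {high:.3f})")
```

The point is not the particular formula but the habit: a descriptive estimate should be accompanied by an honest statement of how precisely it has been estimated.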

If you aren't comfortable with what all this entails, that's ok. Here are some resources to help you get started:

Epidemiology in the Right Direction: The Importance of Descriptive Research

A Framework for Descriptive Epidemiology

Invited Commentary: The Importance of Descriptive Epidemiology

On the Need to Revitalize Descriptive Epidemiology

Prediction

Prediction is all about using the info we have today to make a better guess about what might happen tomorrow. The two main prediction tasks in medicine are diagnosis and prognosis. To help you, I need to know about the clinical decision making process you hope to improve with better diagnostic or prognostic information, and what information you actually have available to make that prediction.

Unlike Description, there is potentially enough whizbang involved in prediction problems to help you chase prestige via publication, especially in this big-data-plus-artificial-intelligence-hyped-up era we live in. This has incentivized the mass production of clinical prediction tools, the vast majority of which will have zero benefit, often because they aren't explicitly developed in the context of a clearly defined decision making process. Also, because prediction tools are interventions, they come with risks of harm. So while the link to decision making might be unavoidably vague for studies aimed at description, it must be crystal clear for those aimed at prediction.

The links below will help you understand what it takes to actually make a useful clinical prediction model.

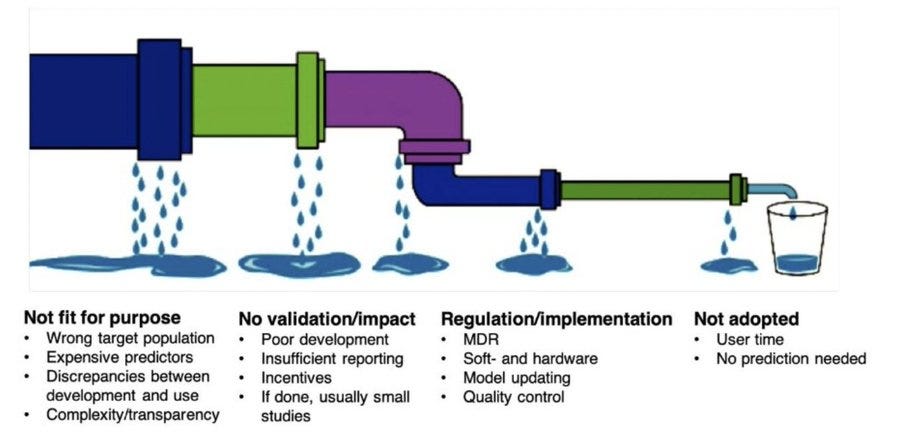

The leaky clinical-prediction-tool implementation pipeline:

Prediction models for diagnosis and prognosis of covid-19: systematic review and critical appraisal

PROBAST: A Tool to Assess the Risk of Bias and Applicability of Prediction Model Studies

Clinical prediction models and the multiverse of madness

Three myths about risk thresholds for prediction models

Causality

Causal questions are about understanding what happens in response to a change in some other factor. In medicine, the “other factor” is usually a medical treatment, but it could also be a policy, some advice, the implementation of a new diagnostic or prognostic tool, etc. Randomized controlled trials are of course our most useful tool for understanding the causal effects of medical treatments, but we can often shed light on causal questions using observational study designs such as cohort and case-control studies.

Critically, if you are interested in answering a causal question, you need to say so clearly. No mumbling about associations. This is especially important for observational studies since they are also often used for developing prediction tools, and how you deal with covariates will be very different depending on whether your immediate goal is prediction or causality.
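To make the prediction-versus-causality distinction concrete, here is a minimal sketch using entirely hypothetical counts. Sicker patients are more likely to be treated, so treatment is strongly associated with the outcome (which could be useful for prediction), yet within each severity stratum the treated and untreated risks are identical. The adjustment shown is simple standardization over a single measured confounder, which assumes severity is the only thing that needs adjusting for. Real analyses are rarely this tidy.

```python
# Hypothetical counts: (severity stratum, treated?) -> (events, total)
counts = {
    ("mild", True): (10, 100),
    ("mild", False): (40, 400),
    ("severe", True): (160, 400),
    ("severe", False): (40, 100),
}


def crude_risk(treated):
    """Risk of the outcome ignoring severity."""
    events = sum(e for (_, t), (e, _) in counts.items() if t == treated)
    total = sum(n for (_, t), (_, n) in counts.items() if t == treated)
    return events / total


def standardized_risk(treated):
    """Stratum-specific risks weighted by each stratum's share of the sample."""
    grand_total = sum(n for (_, n) in counts.values())
    risk = 0.0
    for stratum in {s for (s, _) in counts}:
        stratum_total = sum(
            n for (s, _), (_, n) in counts.items() if s == stratum
        )
        events, n = counts[(stratum, treated)]
        risk += (events / n) * (stratum_total / grand_total)
    return risk


crude_rd = crude_risk(True) - crude_risk(False)
adjusted_rd = standardized_risk(True) - standardized_risk(False)
print(f"Crude risk difference:        {crude_rd:.2f}")
print(f"Standardized risk difference: {adjusted_rd:.2f}")
```

In this toy example, knowing that someone was treated tells you a lot about their risk, but treating them changes nothing. Which of those two statements you care about determines what you should do with the severity variable.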

The C-Word: Scientific Euphemisms Do Not Improve Causal Inference From Observational Data

The Table 2 Fallacy: Presenting and Interpreting Confounder and Modifier Coefficients

A Structural Approach to Selection Bias

Target Validity and the Hierarchy of Study Designs

Triangulation in aetiological epidemiology

You might also be interested in understanding whether the effects of a treatment depend on other factors, e.g. does adjuvant immunotherapy work better in patients with some biomarker? We call this heterogeneity of treatment effects (HTE). This is another important research theme, but one full of misconceptions and flawed thinking, and something that is particularly hard to demonstrate convincingly, so beware.

Mastering variation: variance components and personalised medicine

Can we learn individual-level treatment policies from clinical data?

Personalized evidence based medicine: predictive approaches to heterogeneous treatment effects

Measurement

Being able to measure the things we want to describe, predict and treat is obviously fundamental. It's so fundamental that, in some respects, all clinical research might be thought of as measurement. For example:

A description of the number of influenza cases detected in a place over a time period could be viewed as a measurement of that place and time.

A diagnosis is a measurement of the presence of illness.

A risk prediction score is a measurement of the future.

The entire apparatus of an RCT is an instrument for measuring treatment effects.

We can also flip the semantics and say that measurement is just another word for prediction - that measurements are just predictions of a truth that we can never truly directly observe. Even something as seemingly easy to observe as the length of a newborn infant isn't. Just ask literally anyone who has ever tried.

But despite the overlapping semantics, measurement deserves its own section here. In particular, you might be interested in knowing if a new technique or device does a better job measuring something than whatever options are currently used in practice. You might also be interested in developing and/or evaluating patient reported outcome measures (PROMs) or other psycho-social constructs.

Other points

You aren't allowed to say “risk factor”

People often say their goal is to identify “risk factors”. But what does that mean? Some people use the term to indicate potential causes of outcomes. Then just say cause. Others use it to identify predictors of outcomes. Then just say predict. And, sadly, too many others use it as shorthand for factors that are “statistically associated” with outcomes. In this case, say nothing at all, since this “goal” has no clinical utility whatsoever (beyond what it might suggest about causation or prediction). So once you have framed your research question as description, prediction, causation or measurement, there is no longer a need to talk about risk factors. It's basically just a catch-all phrase to cover up muddy thinking.

Avoid sample size theatre

Finally, there is a very good chance that if you are coming to me to help design a study, you are going to ask about how to plan the sample size. The nitty gritty on how to do this will vary across research questions, so we can't cover the topic in detail here. However, before we talk, I want you to determine what the maximum possible sample size for the study could be given constraints on time and money. Experience says that this is almost always less than the sample size we'd want to fully meet our scientific goals. And that's ok, but let's not fool ourselves about the reality of the situation.

In some situations, not being able to reach the optimal sample size means you shouldn't proceed. For example, we wouldn't ask participants to take on significant risks or other burdens just to conduct a study we won't learn anything useful from. However, in very low risk/burden contexts, a small, exploratory pilot study might well be justifiable.
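One way to be honest about a constrained sample size is to turn the usual calculation around: start from the sample sizes you can actually afford and ask how precise the answer would be. The sketch below does this for the simplest case, a single proportion, using the normal approximation for the 95% confidence interval half-width. The candidate sample sizes and the expected proportion of 0.5 (the most conservative value) are assumptions chosen for illustration. Other research questions need other calculations.

```python
import math


def ci_half_width(n, expected_proportion=0.5, z=1.96):
    """Approximate 95% CI half-width for a proportion (normal approximation)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = expected_proportion
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical feasible sample sizes given time and money.
for n in (50, 100, 200, 400):
    print(f"n = {n:>3}: estimate +/- {ci_half_width(n):.3f}")
```

Note that the half-width shrinks with the square root of n, so quadrupling the sample size only halves the uncertainty. If the precision at your maximum feasible n isn't good enough to inform the decision at hand, it is better to know that before the study starts.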

Consultancy link

If you are a clinical researcher associated with University College Cork and/or the South/Southwest Hospital Group and want to have a chat about study design and/or data analysis, please use this link to book a time using your @ucc.ie or @hse.ie email address.

Excellent read, thank you. Having designed a few studies, in medicine and even in unrelated fields, I appreciate the rigor required to get it right. And, thanks for the references. I'm creating a short-course where we're trying to teach a little bit of epidemiology and I've now got more background reading for the class!

I am a cardiovascular epidemiologist. As your article mentions, predicting/causal inference is a question I am frequently asked, and it is indeed a challenging one to articulate. One perspective I have been taught is that observational studies are not sufficient for drawing causal inferences, which is why ambiguous terms (such as risk factors or associations) are used (as you rightly point out, this should be avoided, and I fully agree). However, I am perplexed about how to choose terms after avoiding them. My understanding is that a reasonable causal inference requires the integration of many studies, including observational research, and information beyond the data. However, this seems to contradict what you are saying. I find both perspectives reasonable. Causal inference is complex, and honestly, I still do not understand how to conduct a perfect causal inference. The more literature I read, the more confused I become about what I am actually doing. Causal inference requires assessing the impact of interventions (is that right?), and it is also challenging in observational studies. Now I wonder if my analytical methods are problematic. I lack a reasonable analysis plan and methodology. If possible, could you provide me with some reference papers on epidemiological (observational study) practices?